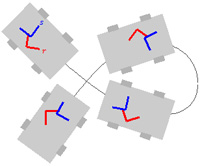

Our calibration algorithm recovers the rigid 3-DOF transformation (2D offset and rotation) between pairs of sensors mounted rigidly in a common plane on a mobile robot. In the diagram above, these sensors are represented by the red and blue reference frames, r and s. The algorithm requires only a set of sensor observations made as the robot moves along a suitable path. It does not require synchronized sensors, nor does it require a complete metrical reconstruction of the environment or of the sensor path. We show that incremental pose measurements alone are sufficient to recover the sensor calibration through nonlinear least-squares estimation. We use the Fisher Information Matrix to compute a Cramér-Rao lower bound (CRLB) on the uncertainty of the resulting calibration.
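To make the estimation step concrete, here is a minimal Python sketch. It assumes the standard planar rigid-body relation between the two sensors' incremental motions: if k = (x, y, θ) is the pose of sensor s in the frame of sensor r and q_r is a displacement of r, then s moves by q_s = ⊖k ⊕ q_r ⊕ k. All helper names (`compose`, `predict`, `calibrate`, and so on) are hypothetical. The damped Gauss-Newton solver with a numerical Jacobian is an illustrative choice. So is the noise model: i.i.d. Gaussian noise with standard deviation `sigma` on the s-displacements only. None of this is the authors' implementation. Under that noise model, the CRLB is the inverse of the Fisher information J^T J / sigma² evaluated at the estimate.

```python
import math


def compose(a, b):
    """SE(2) composition a ⊕ b of planar poses (x, y, theta)."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    return (ax + c * bx - s * by, ay + s * bx + c * by, at + bt)


def inverse(a):
    """SE(2) inverse ⊖a, so that compose(inverse(a), a) is the identity."""
    ax, ay, at = a
    c, s = math.cos(at), math.sin(at)
    return (-c * ax - s * ay, s * ax - c * ay, -at)


def wrap(angle):
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def predict(k, q_r):
    """Displacement of sensor s implied by calibration k and displacement q_r of sensor r."""
    return compose(compose(inverse(k), q_r), k)


def residuals(k, pairs):
    """Stacked (dx, dy, dtheta) mismatch between predicted and measured s-displacements."""
    out = []
    for q_r, q_s in pairs:
        p = predict(k, q_r)
        out += [p[0] - q_s[0], p[1] - q_s[1], wrap(p[2] - q_s[2])]
    return out


def jacobian(k, pairs, eps=1e-6):
    """Central-difference Jacobian of residuals() w.r.t. k; one row per residual."""
    cols = []
    for j in range(3):
        kp, km = list(k), list(k)
        kp[j] += eps
        km[j] -= eps
        cols.append([(a - b) / (2 * eps)
                     for a, b in zip(residuals(kp, pairs), residuals(km, pairs))])
    return [list(row) for row in zip(*cols)]


def normal_matrix(J):
    """J^T J for a Jacobian with three columns."""
    return [[sum(row[a] * row[b] for row in J) for b in range(3)] for a in range(3)]


def inv3(m):
    """Inverse of a 3x3 matrix via its adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[v / det for v in row] for row in adj]


def calibrate(pairs, k0=(0.0, 0.0, 0.0), sigma=1.0, iters=50):
    """Damped Gauss-Newton estimate of k and its CRLB.

    Hypothetical noise model: i.i.d. Gaussian, std sigma, on each component of q_s only.
    """
    def cost(kk):
        return sum(r * r for r in residuals(kk, pairs))

    k = list(k0)
    for _ in range(iters):
        r, J = residuals(k, pairs), jacobian(k, pairs)
        H_inv = inv3(normal_matrix(J))
        g = [sum(row[a] * ri for row, ri in zip(J, r)) for a in range(3)]
        step = [-sum(H_inv[a][b] * g[b] for b in range(3)) for a in range(3)]
        c0, alpha = sum(ri * ri for ri in r), 1.0
        while alpha > 1e-10:  # backtrack until the cost does not increase
            trial = [ki + alpha * si for ki, si in zip(k, step)]
            if cost(trial) <= c0:
                break
            alpha *= 0.5
        else:
            break  # no descent step found: treat as converged
        k = trial
        if alpha * max(abs(si) for si in step) < 1e-12:
            break
    k[2] = wrap(k[2])
    # Fisher information J^T J / sigma^2 at the estimate; its inverse is the CRLB.
    fisher = [[v / sigma ** 2 for v in row] for row in normal_matrix(jacobian(k, pairs))]
    return tuple(k), inv3(fisher)
```

For example, you could build `pairs` from a handful of displacements with different rotations and compute noise-free `q_s` values with `predict(k_true, q)`. `calibrate(pairs)` then recovers `k_true` from the zero initial guess. Suppose instead that every displacement is a pure translation (dθ = 0). Then the predicted q_s no longer depends on the translation part of k, so J^T J is singular in exact arithmetic, and both the estimate and the CRLB blow up. This is one concrete instance of a degenerate motion path.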

Applying the algorithm in practice requires a non-degenerate motion path, a principled procedure for estimating per-sensor pose displacements and their covariances, a way to temporally resample asynchronous sensor data, and a way to assess the quality of the recovered calibration. We give constructive methods for each step. We demonstrate and validate the end-to-end calibration procedure on both simulated and real LIDAR and inertial data, achieving CRLBs, and corresponding calibrations, accurate to within millimeters and milliradians.
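The temporal resampling step can be sketched in the same spirit. The text does not say which interpolation scheme is used. The hypothetical `resample_displacements` helper below simply interpolates one sensor's timestamped pose track at another sensor's timestamps: linearly in (x, y) and along the shortest arc in θ. It then differences consecutive resampled poses into incremental displacements, which is the input form the calibration needs. It assumes strictly increasing timestamps and does not propagate covariances, which a complete procedure would also have to do.

```python
import bisect
import math


def wrap(angle):
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def interpolate_pose(times, poses, t):
    """Linearly interpolate a planar pose (x, y, theta) at time t.

    times must be strictly increasing; theta is interpolated along the shortest arc.
    """
    if not times[0] <= t <= times[-1]:
        raise ValueError("query time outside the sampled interval")
    j = bisect.bisect_right(times, t)
    if j == len(times):  # t coincides with the last sample
        return poses[-1]
    t0, t1 = times[j - 1], times[j]
    a = (t - t0) / (t1 - t0)
    (x0, y0, th0), (x1, y1, th1) = poses[j - 1], poses[j]
    return (x0 + a * (x1 - x0), y0 + a * (y1 - y0), th0 + a * wrap(th1 - th0))


def relative(p, q):
    """Displacement ⊖p ⊕ q: pose q expressed in the frame of pose p."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    c, s = math.cos(p[2]), math.sin(p[2])
    return (c * dx + s * dy, -s * dx + c * dy, wrap(q[2] - p[2]))


def resample_displacements(times, poses, query_times):
    """Resample a pose track at query_times and return consecutive displacements."""
    resampled = [interpolate_pose(times, poses, t) for t in query_times]
    return [relative(p, q) for p, q in zip(resampled, resampled[1:])]
```

Linear interpolation is only a reasonable stand-in when the pose samples are dense relative to the robot's motion. With sparse samples, a motion model or a smoother would be a better fit.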